When I visited Indonesia back in 2003, I spent most of my time on the island of Lombok. On my latest trip, I saved Lombok for the last three weeks. I sorta knew what to expect there, so travel planning was less of a hassle than on Sumbawa or Flores/Komodo.

The phrase “Apa kabar?” means “How are you?” in Bahasa Indonesia. The answer is usually “baik” or “bagus”. So, I was wondering: How is Lombok doing nowadays?

Lombok Then And Now

Of course, I knew that a lot would have changed over the past 20+ years. So let’s start with some visual comparisons:

Mandalika

Even when I first visited Lombok ages ago, I had heard about government plans to boost tourism on the island. That seems to make sense, considering the over-tourism on neighboring Bali.

Only now did I learn about the scale of tourism development. One of the drivers behind this seems to be the newly established Mandalika Special Economic Zone, which now covers a huge chunk of Lombok’s south coast. (According to OSM, it stretches between Kuta and Gerupuk.)

I guess that all of this is aimed at creating a (high-end) resort area, similar to Nusa Dua on Bali. However, development is not quite there yet – and there are several distinguishing factors. Notably, one of the first projects to be implemented was a motorsports race track, the Mandalika International Street Circuit (a.k.a. Pertamina Mandalika International Circuit a.k.a. Sirkuit Internasional Mandalika). Completed in the early 2020s, it has regularly hosted MotoGP races since 2022, along with other international competitions.

This phase of development has also created a new road network in the area: mostly multi-lane roads connecting at massive roundabouts, generally arranged in a rather confusing manner. Other developments – big hotels, a golf course, an amusement park – are lagging behind, so the new roads feel ridiculously oversized and sometimes end in the middle of nowhere:

Imagery ©2026 Airbus, CNES / Airbus, Maxar Technologies; Map data ©2026 Google

I’ve heard rumors that locals have been driven off their land for some of the ongoing construction projects. And there’s certainly some impact on nature (which includes mangroves). However, my overall impression is that this whole Mandalika thingy could be reasonably sustainable in the end. It’s certainly not a super-sized bridge-to-nowhere situation. And some of it seems to benefit the locals directly, not just those working in tourism.

But where does the name Mandalika come from? When I visited Lombok in 2003, I’d heard of place names like Kuta, Gerupuk, Mataram, Lembar, Mawun, Senaru, Gunung Rinjani, Segara Anak, Gili Trawangan, etc. In fact, I had visited most of these. But I cannot recall hearing of any place called Mandalika. So why is it being used so prominently today? I forgot to ask the locals before I left, so I had to look it up just now. Turns out that it’s named after Princess Mandalika, a character from Sasak folklore. Apparently, her story is still being commemorated by the annual Bau Nyale festival.

Gerupuk

When arriving on Lombok a couple of weeks ago, I decided to skip busy Kuta. Instead, I headed straight to Gerupuk, a small fishing village located in a deep bay a few kilometers further east.

I had visited Gerupuk before, because of its famous surf spots. But back in 2003, we did not stay overnight, because there just wasn’t any suitable accommodation there. Not even a cafe or a warung. There was only a small shack by the shore, where you could rent surfboards (from a very limited collection, not at all beginner friendly). I think that most houses were built of wood and reed, though I missed the opportunity to take photos of the village itself.

Much has changed since. As a rule of thumb, I’d say that Gerupuk today is similar to Kuta Lombok twenty years ago. There are several guesthouses, restaurants, warungs, mini-markets, surf shops, etc. But most of it is fairly small-scale and locally owned. There are also a couple of hotels nearby, but no oversized resorts yet:

I decided to stay at Edo’s Homestay, which is a great starting point for boat trips to Gerupuk’s surf spots. They have a nice restaurant right at the beach, plus surf and scooter rental. I liked it so much that I stayed with them for my whole Lombok sojourn (about 3 weeks).

Wet Season Road Conditions

As on most of the Lesser Sunda Islands, the wet season hits Lombok between October and April. My whole Indonesia trip fell right into this period, and I was constantly on edge about it. Whenever heavy rainfall occurred (mostly in the evening or at night), I thought to myself: well, this is it, it’s gonna be like this for the rest of the trip. But then there would be a couple of dry days and I’d forget about the rainy season altogether.

I do actually enjoy a moderate rain shower in the surf lineup; it can be rather peaceful. But I also wanted to get around by scooter, and wet season road conditions turned out to be challenging at times. Generally, Lombok has a pretty decent road network. Not just the shiny new roads of Mandalika: even small country roads between rice paddies and up the slopes of Gunung Rinjani are in good shape. But occasionally, the rains do have an impact:

Unfortunately, local authorities didn’t seem overly eager to fix these problems right away. I guess they’ll do a big round of repairs at the end of the wet season.

Tetebatu

One of my day-trips took me to the small village of Tetebatu. It’s situated on the southern slopes of Gunung Rinjani, right at the border of Rinjani National Park:

(It wasn’t sitting on that wall, but rather in an impressive spider web, which the photo didn’t capture.)

I didn’t take many pictures in the village of Tetebatu itself. It’s certainly a nice village, but it’s kinda spread out and most of the buildings simply blend into the surrounding trees. That said, I’ve only seen a small part of it and I may have missed something interesting.

Awang & Sade

Another day trip took me to Awang, the next big bay east of Gerupuk:

These tsunami escape route signs (as seen in that last pic) were everywhere throughout my Indonesia trip. I’ve seen them in all coastal regions, be it on Bali, Lombok, Sumbawa, or Flores. And frankly, I’m not surprised that Indonesians are nervous about tsunamis after the 2004 Indian Ocean tsunami.

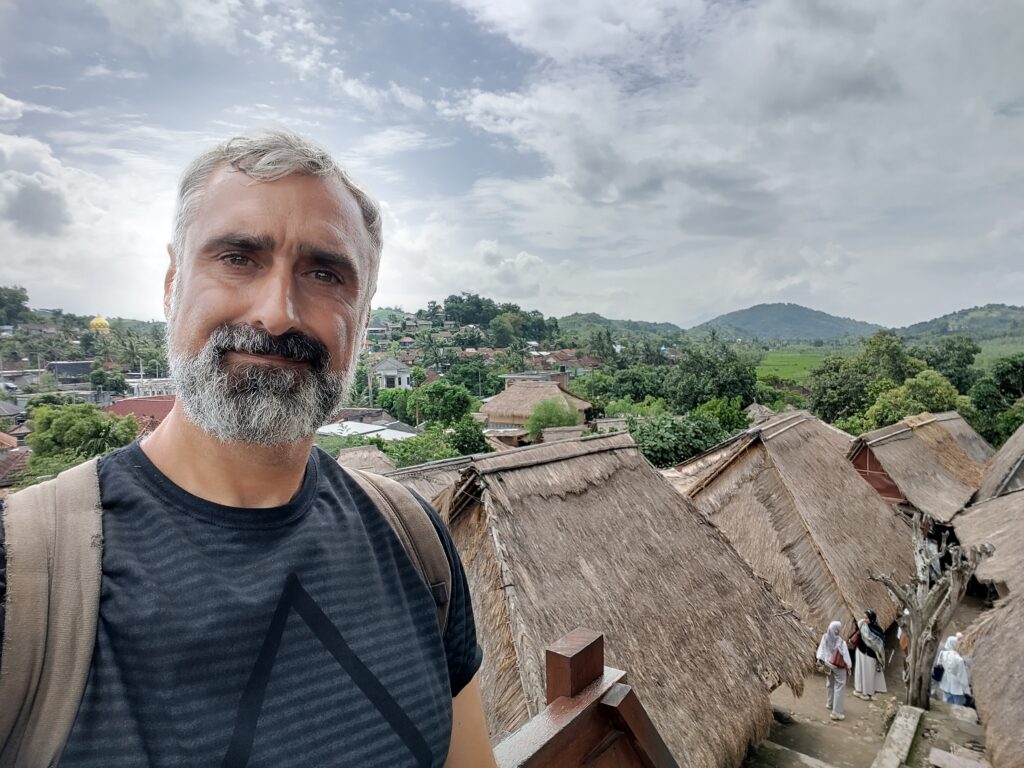

I didn’t stay long in the Awang area, just enjoyed the landscape and had a little snack at a roadside warung. Then I headed back west, bypassing Gerupuk and Kuta, and made my way to Sade. This is a small traditional village, about 6km inland from Kuta. Frankly, it’s a bit of a tourist trap, but I enjoyed it nevertheless:

I really love these straw roofs. On Bali they are often incorporated into modern buildings, particularly in touristy areas. But on Lombok, Sumbawa, and Flores they are pretty rare. The guide in Sade told me that the straw cover needs to be replaced after around ten years. I guess tin roofs are less of a hassle in most contexts.

Lombok’s South Coast

Apart from the trips to Tetebatu and Sade, I mostly stuck to Lombok’s south coast. This is not one long beach like the one that stretches from Kuta (Bali) to Seminyak and Canggu. Instead, it’s all hills, cliffs, capes, and bays. Many of these bays harbor amazing beaches (“pantai”). Here are some of them, roughly from east to west:

Many of the above beaches have surf spots, but I haven’t tried all of them. Let’s have a look at those that I’ve surfed…

The Surf

Pantai Mawan is not famous for surfing, but there are small peaks at the flanks of the bay. I tried the right-hander on the western side. Short rides, but no crowds at all. Despite the many warungs at the beach, surf rental is hard to find, so I had to ask around for a board.

One spot that I visited repeatedly was Areguling, the next bay west of Kuta. There’s a channel in the middle of the bay, with surf breaks on each side. It’s not too far to paddle out yourself, but you can get a boat ride for a reasonable price, and there are plenty of surfboards to rent. It’s a reef break, and the right-hander can break pretty fast even on smaller swells. The surrounding hills block some of the westerly winds that seem prevalent during the wet season. And it’s usually less crowded than the spots at Gerupuk.

There’s a long, mellow a-frame in the middle of the bay at Pantai Aan, not far from Gerupuk. This spot is popular with beginners and longboarders. This was the only time that I picked a proper longboard, a heavy 9-footer. Despite some wind, the spot worked surprisingly well. Took me a couple of waves to get the hang of turning such a big board, though.

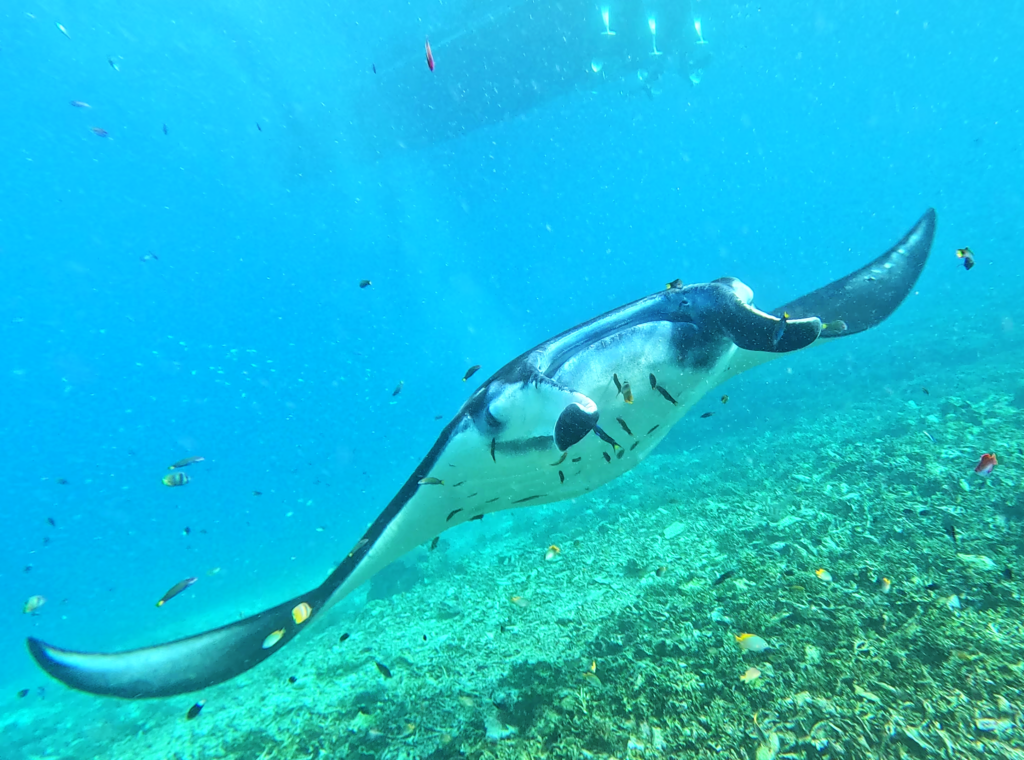

Having established my base right in Gerupuk, I stuck to the surf spots in Gerupuk bay on most days. All of these are best reached by boat from Gerupuk. I’ve visited Inside, Don Don, and Outside Rights, but skipped Outside Lefts (too far) and Kiddies (only works on bigger swells nowadays).

Most often, I’d end up at Gerupuk Inside, which worked better at low to mid tides. It’s fairly exposed to westerly side-shore winds, which can also create a bit of a current. I was too lazy to get up early and beat the wind, but usually it calmed down a little in the late afternoon, too.

Gerupuk Inside is an a-frame, though usually the right offers longer rides and an easier paddle out. That said, I had some excellent waves to the left. The main peak can be powerful, suitable for shorter boards. I was on a 7’6″ fun-board most of the time though.

The section further down the line is better suited for longboarders and beginners, so it gets pretty crowded. Many people book surf guides who push them into waves with little regard for priority rules. At the main peak, surfers were more considerate on average. But the peak is a bit shifty, and I found it hard to tell whether people would go left or right. Even when both sides were clear, I sometimes struggled to decide which way to go myself.

There is a second peak about 100 m west of Gerupuk Inside. Some locals call it Coco, because of the coconut trees in front of it. I had one great session there, with just five people in the lineup. But this wave tends to get too soft when conditions aren’t just right.

Another spot, called Don Don, is about halfway between Gerupuk village and Inside. When conditions check out, this a-frame works equally well in either direction. The crowd factor is similar to Gerupuk Inside. One day, there was a photographer around and I got some rare footage of myself surfing on Lombok:

Gerupuk Outside (more precisely: Outside Rights) is a little different. First off, the scenery is amazing, with a big cliff towering over the lineup. The cliff blocks some of the westerly wind, and wind from the north-west can create excellent offshore conditions. Gerupuk Outside is more exposed to the open ocean and can pick up more swell than Inside or Don Don.

During my stay, there were usually three distinct sections at Gerupuk Outside. The first section produced huge waves (2 meters or more) but looked rather soft, with wide shoulders. Nevertheless, I skipped this section for fear of getting washed away when a bigger set rolled through.

The second section was smaller but tended to break faster and close out after a short ride. After catching a wave, I had a hard time paddling back out, especially on a bigger board that cannot be duck-dived. On the other hand, I had trouble catching enough waves on shorter boards (under 7 feet). I got a little scared of the stronger waves at times and got tired quickly from all the paddling.

The third section was smaller still and a little mellower. It was also less prone to closing out, so I could ride all the way to the right for an easy exit and paddle back out. I often opted for these mellower waves, which worked well with the 7’6″ fun-board that I was using most of the time.

While Gerupuk Outside did draw massive crowds, these tended to spread out across the different sections. Less experienced surfers (and their guides) mostly picked the last section, and surfers at the other sections were more respectful of priority rules. By the way, Gerupuk Outside worked best at mid to high tide. It got quite shallow at lower tide, but more advanced surfers could get faster-breaking waves and even barrels.

Despite the huge crowds, Gerupuk is still a great surf destination. With so many spots, there’s always one that’s gonna work.

It’s a little annoying to depend on a boat all the time. But with some patience, there are enough people around to share a boat with. Prices are OK, too, for both board rental and boat charter – even compared to Bali or Sumbawa. That said, there are too many middlemen involved and prices can vary widely. Once I had been around for a while and got to know the locals, we settled on a standard price that I found reasonable. I guess more aggressive haggling could reduce prices further, but there was no need to overdo it.

I’ve had a look at some online reviews recently, and some speak of a “Gerupuk Surf Mafia” that drives up prices and makes the overall surf experience more painful. Some people claim that they were pressured to book a surf guide or lessons, and experienced hostility in the lineup when going without a guide. This has never happened to me, even though I never booked a guide.

It helps to know the different spots and to have a general idea which one works best for your skill level and the current wave conditions. Otherwise you might end up on a boat heading to a session that you will not enjoy. When in doubt, you can have a look at multiple spots first, or switch to a different spot after a while. (Most boat captains ask for a small extra fee in such situations.)

As mentioned above, some of the surf guides have little concern for priority and push their clients into waves that are already taken. But this is no different from the surf guides at an average spot on Bali. Overall, the vibe stayed friendly and welcoming in every lineup that I’ve visited on Lombok.

Odds And Ends

My first trip to Lombok back in 2003 was very special to me. But I’m happy to say that I enjoyed Lombok just as much this time as back then.

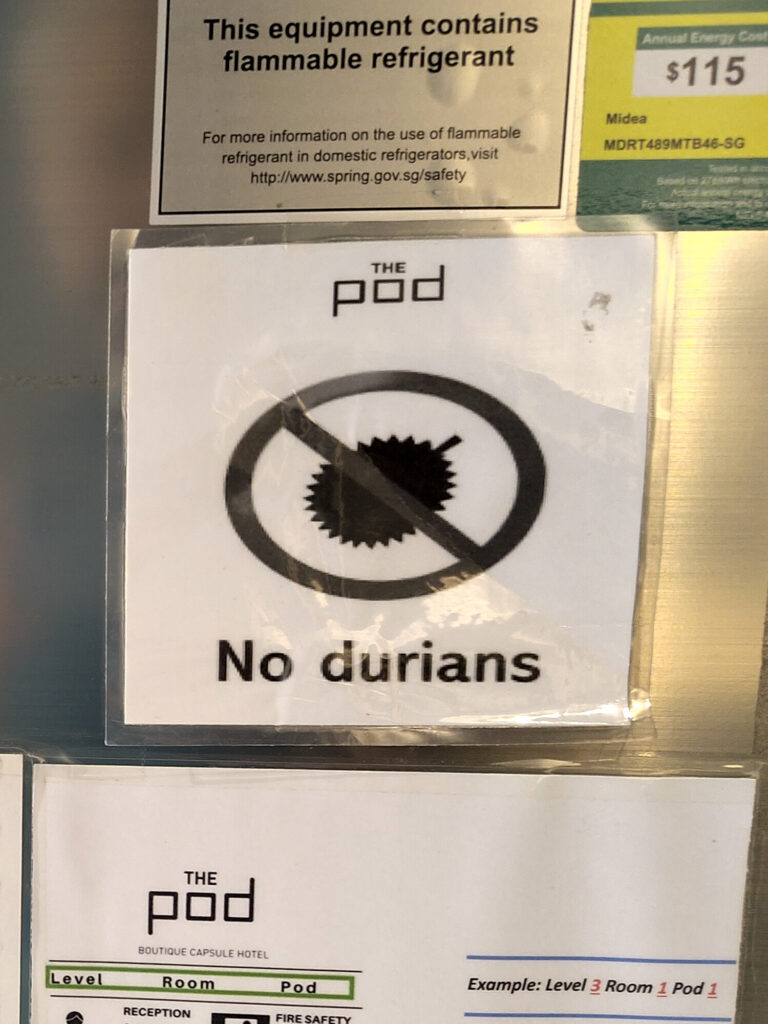

So I’m ending this post with some of the creative signage that I’ve observed on Lombok:

Apa kabar?

Bagus!